Rule-based in comparison with corpus-based

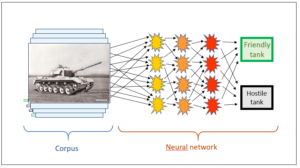

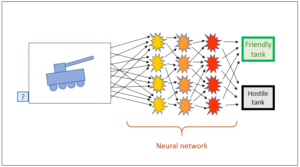

Corpus-based AI (the “Tanks” type; cf. introductory AI post) successfully overcame its weaknesses (cf. preceding post). This was the result of a combination of “brute force” (improved hardware) and an ideal window of opportunity, i.e. when during the super-hot phase of internet expansion, companies such as Google, Amazon, Facebook and many others were able to collect large volumes of data and feed their data corpora with them – and a sufficiently big data corpus is the linchpin of corpus-based AI.

Brute force was not enough for rule-based AI, however, nor was there any point in collecting lots of data, since data also have to be organised for rule construction – and largely manually at that, i.e. by human expert specialists.

Challenge 1: different mentalities

Not everyone is equally fascinated by the process of building algorithms. Building algorithms requires a particular faculty of abstraction combined with a very meticulous vein – with regard to abstractions, at any rate. No matter how small an error in the rule construction may be, it will inevitably have an impact. Mathematicians possess the consistently meticulous mentality that is called for here, but natural scientists and engineers are also favourably characterised by it. Of course, accountants must also be meticulous, but AI rule construction additionally requires creativity.

Salespersons, artists and doctors, however, work in a different field. Abstractions are often incidental; the importance lies in what is tangible and specific. Empathy for other people can also be very important, or someone has to be able to act with speed and precision, as is the case with surgeons. These characteristics are all very valuable, but they are less relevant to algorithm construction.

This is a problem for rule-based AI because rule construction requires the skills of one camp and the knowledge of the other: it requires the mentality that makes a good algorithm designer combined with the way of thinking and the knowledge of the specialist field to which the rules refer. Such combinations of specialist knowledge with a talent for abstraction are rare. In the hospitals in which I worked, both cultures were quite clearly visible in their separateness: on the one hand the doctors, who at best accepted computers for invoicing or certain expensive technical devices but had a low opinion of information technology in general, and on the other hand the computer scientists, who did not have a clue about what the doctors did and talked about. The two camps simply avoided each other most of the time. Needless to say, it was not surprising that the expert systems designed for medical purposes only worked for very small specialist fields – if they had progressed beyond the experimentation stage at all.

Challenge 2: where can I find the experts?

Experts who are creative and equally at home in both mentality camps are obviously hard to find. This is aggravated by the fact that there are no training facilities for such experts. Equally realistic are the following questions: where are the instructors who are conversant with the current challenges? Which diplomas are valid for what? And how can an investor in this new field evaluate whether the experts employed are fit for purpose and the project is moving in the right direction?

Challenge 3: the sheer volume of detailed rules required

The fact that a large volume of detailed knowledge is required to be able to draw meaningful conclusions in a real situation was already a challenge for corpus-based AI. After all, it was only with really large corpora, i.e. thanks to the internet and a boost in computer performance, that it succeeded in gathering the huge volume of detailed knowledge which is one of the fundamental prerequisites for every realistic expert system.

For rule-based AI, however, it is particularly difficult to provide the large volume of knowledge since this provision of knowledge requires people who manually package this large volume of knowledge into computer-processable rules. This is very time-consuming work, which additionally requires hard-to-find human specialist experts who are able to meet the above-mentioned challenges 1 and 2.

In this situation, the question arises as to how larger-scale rule systems which actually work can be built at all. Could there be any possibilities for simplifying the construction of such rule systems?

Challenge 4: complexity

Anyone who has ever tried to really underpin a specialist field with rules discovers that they quickly encounter complex questions to which they find no solutions in the literature. In my field of Natural Language Processing (NLP), this is obvious. The complexity cannot be overlooked here, which is why it is imperative to deal with it. In other words: the principle of hope is not adequate to the task; rather, the complexity must be made the subject of debate and be studied intensively.

What complexity means and how it can be countered will be the subject matter of a further post. Of course, complexity must not result in an excessive increase in rules (cf. challenge 3). The question which therefore arises for rule-based AI is: how can we build a rule system which takes into consideration the wealth of details and complexity while still remaining simple and manageable?

The good news is: there are definitely answers to this question.

In a following post, the challenges will be specified.

This is a post about artificial intelligence.

Translation: Tony Häfliger and Vivien Blandford