Have the neural networks outpaced the rule-based systems?

It cannot be ignored: corpus-based AI has overtaken rule-based AI by far. Neural networks are setting the pace wherever we look. Is the competition dozing? Or are rule-based systems simply incapable of yielding results equivalent to those of neural networks?

My answer is that the two methods are, as a matter of principle, suited to very different functions. A look at how each of them works makes clear what it can usefully be employed for. Depending on the problem to be tackled, one or the other has the advantage.

Yet the impression remains: the rule-based variant seems to be on the losing side. Why is that?

In what dead end has rule-based AI got stuck?

In my view, rule-based AI is lagging behind because it is unwilling to cast off its inherited liabilities – although doing so would be so easy. It is a matter of

- acknowledging semantics as an autonomous field of knowledge,

- using complex concept architectures,

- integrating an open and flexible logic (NMR).

We have been doing this successfully for more than 20 years. What do the three points mean in detail?

Point 1: acknowledging semantics as an autonomous field of knowledge

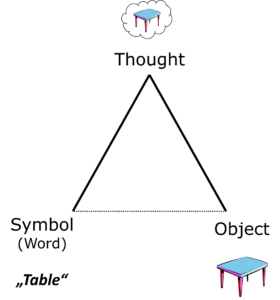

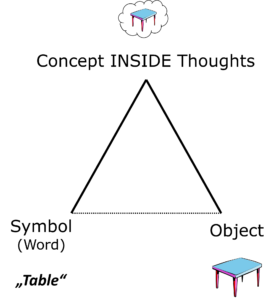

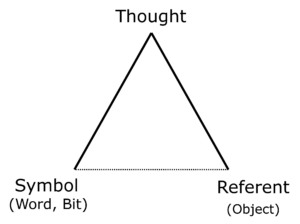

Usually, semantics is considered to be part of linguistics. In principle, there would not be any objection to this, but linguistics harbours a trap for semantics which is hardly ever noticed: linguistics deals with words and sentences. The error consists in perceiving meaning, i.e. semantics, through the filter of language, and assuming that its elements have to be arranged in the same way as language does with words. Yet language is subject to one crucial limitation: it is linear, i.e. sequential – one letter follows another, one word comes after another. It is impossible to place words in parallel next to each other. When we are thinking, however, we are able to do so. And when we investigate the semantics of something, we have to do so in the way we think and not in the way we speak.

Thus we have to find formalisms for concepts that match the way concepts occur in thought. Language is limited by the linear sequence of its elements, and therefore has to reproduce compounds and complex relational structures in a makeshift way with grammatical tricks, and differently in every language. This structural limitation does not apply to thinking, and so the structures on the side of semantics are completely different from those on the side of language.

Word ≠ concept

What certainly fails to work is a simple “semantic” annotation of words. A word can have many and very different meanings. One meaning (= a concept) can be expressed with different words. If we want to analyse a text, we must not look at the individual words but always at the general context. Let’s take the word “head”. We may speak of the head of a letter or the head of a company. We can integrate the context into our concept by associating the concept of <head< with other concepts. Thus there is a <body part<head< and a <function<head<. The concept on the left (<body part<) then states the type of the concept on the right (<head<). We are thus engaged in typification. We look for the semantic type of a concept and place it in front of the subconcept.
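The distinction between word and concept can be sketched in a few lines of Python. This is only an illustration and not our actual system: the `Concept` class, the tiny `LEXICON`, the `TYPE_CUES` table and the `disambiguate` function are all hypothetical names invented for this example, and real context analysis is far richer than a lookup of cue words.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """A typified concept: the semantic type stands in front of the subconcept."""
    type: str   # e.g. "body part"
    name: str   # e.g. "head"

    def __str__(self) -> str:
        # Mimic the notation used in the text: <type<subconcept<
        return f"<{self.type}<{self.name}<"


# Hypothetical mini-lexicon: one word maps to several typified concepts.
LEXICON = {
    "head": [Concept("body part", "head"), Concept("function", "head")],
}

# Hypothetical cue words that support a semantic type (an assumption for this sketch).
TYPE_CUES = {
    "body part": {"injury", "pain", "neck"},
    "function": {"company", "department", "team"},
}


def disambiguate(word, context):
    """Return the candidate concepts whose type is supported by the context.

    If no cue word is present, all candidates are returned unchanged.
    """
    candidates = LEXICON.get(word, [])
    context_words = set(context)
    supported = [
        c for c in candidates
        if TYPE_CUES.get(c.type, set()) & context_words
    ]
    return supported or candidates


if __name__ == "__main__":
    for concept in disambiguate("head", ["head", "of", "the", "company"]):
        print(concept)
```

The point of the sketch is only that the unit being processed is the typified concept and not the word: the same word “head” yields different concepts depending on what surrounds it.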

Consistently composite data elements

The use of typified concepts is nothing new. However, we go further and create extensive structured graphs, which then constitute the basis for our work. This is completely different from working with words. The concept molecules that we use are such graphs and possess a very special structure to ensure that they can be read easily and quickly by both people and machines. This composite representation has many advantages, among them the fact that combinatorial explosion is countered very simply and that the number of atomic concepts and rules can thus be drastically cut. Thanks to typification and the use of attributes, similar concepts can be refined at will, which means that by using molecules we are able to speak with a high degree of precision. In addition, the precision and transparency of the representation owe a great deal to the fact that the special structure of the graphs (molecules) has been directly derived from the multifocal concept architecture (cf. Point 2).

Point 2: using complex concept architectures

Concepts are linked by means of relations in the graphs (molecules). The above-mentioned typification is such a relation: when the <head< is perceived as a <body part<, then it is of the <body part< type, and there is a very specific relation between <head< and <body part<, namely a so-called hierarchical or ‘is-a’ relation – the latter because in the case of hierarchical relations, we can always say ‘is a’, i.e. in our case: the <head< is a <body part<.

Typification is one of the two fundamental relations in semantics. We allocate a number of concepts to a superordinate concept, i.e. their type. Of course this type is again a concept and can therefore be typified again in turn. This results in hierarchical chains of ‘is-a’ relations with increasing specification, such as <object<furniture<table<kitchen table<. When we combine all the chains of concepts subordinate to a type, the result is a tree. This tree is the simplest of the four types of architecture used for an arrangement of concepts.
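How chains of ‘is-a’ relations combine into a tree can be shown with a minimal Python sketch. The function names `build_tree` and `is_a`, the nested-dictionary representation and the second example chain ending in “chair” are assumptions made for illustration only; they say nothing about how the concept molecules themselves are stored.

```python
def build_tree(chains):
    """Merge is-a chains (most general concept first) into a nested dict."""
    tree = {}
    for chain in chains:
        node = tree
        for concept in chain:
            # Reuse an existing branch or open a new one.
            node = node.setdefault(concept, {})
    return tree


def _find(node, target):
    """Return the subtree below `target`, or None if it is not in the tree."""
    for name, children in node.items():
        if name == target:
            return children
        found = _find(children, target)
        if found is not None:
            return found
    return None


def _contains(node, target):
    """True if `target` occurs anywhere in this subtree."""
    return any(
        name == target or _contains(children, target)
        for name, children in node.items()
    )


def is_a(tree, concept, ancestor):
    """True if `concept` lies somewhere below `ancestor` in the tree."""
    subtree = _find(tree, ancestor)
    return subtree is not None and _contains(subtree, concept)


if __name__ == "__main__":
    chains = [
        ["object", "furniture", "table", "kitchen table"],
        ["object", "furniture", "chair"],  # hypothetical second chain
    ]
    tree = build_tree(chains)
    print(is_a(tree, "kitchen table", "furniture"))
    print(is_a(tree, "chair", "table"))
```

In such a tree every concept has exactly one type above it. That is what makes the structure simple, and it is also the restriction that the more complex architectures are meant to overcome.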

This tree structure is our starting point. However, we must acknowledge that a mere tree architecture has crucial disadvantages which preclude the establishment of really precise semantics. Those who are interested in the improved and more complex types of architecture and their advantages and disadvantages will find a short description of the four types of architecture on the website of meditext.ch.

In the case of the concept molecules, we have geared the entire formalism, i.e. the intrinsic structure of the rules and molecules themselves, to the complex architectures. This has many advantages, for the concept molecules now have precisely the same structure as the axes of the multifocal concept architecture. The complex folds of the multifocal architecture can be conceived of as a terrain, with the dimensions or semantic degrees of freedom as complexly interlaced axes. The concept molecules now follow these axes with their own intrinsic structure. This is what makes computing with molecules so easy. It would not work like this with simple hierarchical trees or multidimensional systems. Nor would it work without consistently composite data elements whose intrinsic structure follows the ramifications of the complex architecture almost as a matter of course.

Point 3: integrating an open and flexible logic (NMR)

For theoretically minded scientists, this point is likely to be the toughest, for classical logic appears indispensable to most of them, and many bright minds are proud of their proficiency in it. Classical logic is indeed indispensable – but it has to be used in the right place. My experience shows me that we need another logic in NLP (Natural Language Processing), namely one that is not monotonic. Such non-monotonic reasoning (NMR) enables us to attain the same result with far fewer rules in the knowledge base. At the same time, maintenance is made easier. Also, it is possible for the system to be constantly developed further because it remains logically open. A logically open system may disquiet a mathematician, but experience shows that an NMR system works substantially better for the rule-based comprehension of the meaning of freely formulated text than a monotonic one.
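What “not monotonic” means can be illustrated with a small Python sketch. It shows one simple and well-known form of non-monotonic reasoning, namely defaults that are inherited along ‘is-a’ links and overridden by more specific exceptions. This is not a description of our own rule engine: the textbook bird and penguin example, the tables `IS_A`, `BASE_DEFAULTS` and `EXTENDED_DEFAULTS`, and the function `lookup` are all assumptions chosen for illustration.

```python
# Hypothetical is-a links (child -> parent).
IS_A = {"penguin": "bird", "bird": "animal"}

# Knowledge base before the exception is known.
BASE_DEFAULTS = {
    "bird": {"can_fly": True},
}

# The same knowledge base after one more specific rule has been added.
EXTENDED_DEFAULTS = {
    "bird": {"can_fly": True},
    "penguin": {"can_fly": False},  # exception overrides the inherited default
}


def lookup(concept, prop, is_a, defaults):
    """Walk up the is-a chain; the most specific default found first wins.

    Returns None if no concept on the chain says anything about `prop`.
    """
    current = concept
    while current is not None:
        if prop in defaults.get(current, {}):
            return defaults[current][prop]
        current = is_a.get(current)
    return None


if __name__ == "__main__":
    # With the smaller knowledge base, the default is inherited.
    print(lookup("penguin", "can_fly", IS_A, BASE_DEFAULTS))
    # Adding knowledge retracts the earlier conclusion: this is non-monotonic.
    print(lookup("penguin", "can_fly", IS_A, EXTENDED_DEFAULTS))
```

In a monotonic logic, adding a rule can never invalidate an earlier conclusion, so every exception would have to be written into the general rule itself. Here a single added entry is enough, which hints at why fewer rules are needed and why the knowledge base stays open to later refinement.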

Conclusion

Today, the rule-based systems appear to be lagging behind the corpus-based ones. This impression is deceptive, however, and derives from the fact that most rule-based systems have not yet managed to break with their own past and become more modern. This is why they

- are only applicable to clear tasks in a small and well-defined domain, or

- are very rigid and therefore hardly employable, or

- require an unrealistic amount of resources and become unmaintainable.

If, however, we use consistently composite data elements and more complex concept architectures, and if we deliberately refrain from monotonic reasoning, a rule-based system will enable us to get further than a corpus-based one – for the appropriate tasks.

Rule-based and corpus-based systems differ a great deal from each other, and depending on the task in hand, one or the other has the edge. I will deal with this in a later post.

The next post will deal with the current distribution of the two AI methods.

This is a post about artificial intelligence.

Translation: Tony Häfliger and Vivien Blandford