IF-THEN and Time

It is widely believed that there is nothing complicated about the IF-THEN of logic. In my view, however, this overlooks the fact that there are actually two variants of IF-THEN, which differ in whether or not they contain an internal time element.

Dynamic (real) IF-THEN

For many of us, it’s self-evident that the IF-THEN is dynamic and has a significant time element. Before we reach our conclusion (the THEN), we closely examine the IF, i.e. the condition that permits the conclusion. In other words, the condition is considered FIRST, and only THEN is the conclusion reached.

This is true not only of human thinking but also of computer programs. Computers can check long and complex conditions (IFs). The data for these conditions must be read from memory by the processor, and it may even be necessary to carry out small calculations contained in the IF statement and then compare their results with the specified conditions. All of this naturally takes time. However fast the computer and however short the time needed to check the IF, that time is still measurable. Only AFTER the check can the conclusion formulated in the programming language, the THEN, be executed.
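
To make this time element tangible, here is a minimal Python sketch of a dynamic IF-THEN in a program. The condition (an average compared against a threshold), the function name `dynamic_if_then` and the event log are hypothetical illustrations, not taken from any particular system. The log shows that the IF is evaluated before the THEN runs, and the clock readings show that the check itself consumes some time, however little.

```python
import time

def dynamic_if_then(values, threshold, log):
    """Hypothetical dynamic IF-THEN: check the condition first, then act.

    Assumes `values` is a non-empty list of numbers. Events are appended
    to `log` in the order in which they happen.
    """
    start = time.perf_counter_ns()
    # IF: read the values and perform a small calculation before comparing
    condition = sum(values) / len(values) > threshold
    checked = time.perf_counter_ns()
    log.append(("IF checked", condition))

    result = None
    if condition:
        # THEN: can only run AFTER the condition has been evaluated
        result = "alarm"
        log.append(("THEN executed", result))
    return result, checked - start


log = []
result, elapsed_ns = dynamic_if_then([3, 5, 10], threshold=4, log=log)
print(result)   # alarm
print(log)      # [('IF checked', True), ('THEN executed', 'alarm')]
# Time spent on the IF alone; typically a small number of nanoseconds,
# depending on the machine and the resolution of its clock
print(f"checking the IF took {elapsed_ns} ns")
```

The exact timing will differ from run to run; what stays fixed is the order of events in the log.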

In human thinking, as in the execution of a computer program, the IF and the THEN are clearly separated in time. This should come as no surprise: both the execution of a computer program and human thinking are real processes that take place in the physical world, and all real-world processes take time.

Static (ideal) IF-THEN

It may, however, surprise you to learn that in classical mathematical logic the IF-THEN takes no time at all. The IF and the THEN exist simultaneously. If the IF is true, the THEN is automatically and immediately also true. Strictly speaking, it is incorrect even to speak of a before and an after, since statements in classical mathematical logic exist outside of time. If a statement is true, it is always true, and if it is false, it is always false (this is monotonicity; see previous posts).
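
In classical logic this static IF-THEN is usually formalised as material implication, which is completely determined by a truth table. The following Python sketch (the names `implies` and `TRUTH_TABLE` are illustrative) computes that table. Python itself evaluates `(not p) or q` step by step, of course, but the value it returns is fixed by the truth values of p and q alone; nothing in the table refers to a before or an after.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material implication: 'IF p THEN q' is false only when p is true and q is false."""
    return (not p) or q

# The value depends on nothing but the truth values of p and q:
# no clock, no state, no before and after.
TRUTH_TABLE = {(p, q): implies(p, q) for p, q in product([True, False], repeat=2)}

for (p, q), value in TRUTH_TABLE.items():
    print(f"p={p!s:<5} q={q!s:<5} IF p THEN q: {value}")
```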

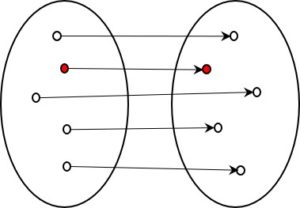

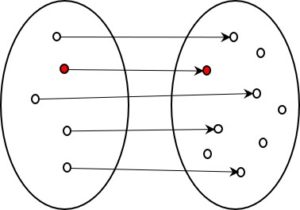

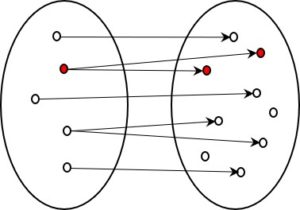

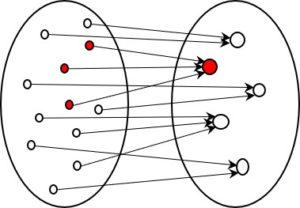

The mathematical IF-THEN is often explained using Venn diagrams (set diagrams). In these visualisations, the IF may, for example, be represented by a set that is a subset of the THEN set. For mathematicians, IF-THEN is a relation that can be derived entirely from set theory. It’s a question of the (unchangeable) states of true or false rather than of processes, such as thinking in a human brain or the execution of a computer program.
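
The link between IF-THEN and set inclusion can also be checked mechanically. In this sketch, which assumes a small finite universe of numbers chosen purely for illustration, A is a subset of B exactly when "IF x is in A THEN x is in B" is true for every x. The result agrees with Python’s built-in subset operator `<=` as long as both sets lie inside the universe.

```python
def subset_via_implication(a: set, b: set, universe: set) -> bool:
    """A is a subset of B exactly when, for every x in the universe,
    'IF x in A THEN x in B' holds (material implication)."""
    return all((x not in a) or (x in b) for x in universe)

# Illustrative universe and sets (both sets lie inside the universe)
universe = set(range(1, 11))
multiples_of_4 = {x for x in universe if x % 4 == 0}   # the IF set: {4, 8}
evens = {x for x in universe if x % 2 == 0}            # the THEN set

print(subset_via_implication(multiples_of_4, evens, universe))  # True
print(subset_via_implication(evens, multiples_of_4, universe))  # False
# Agrees with Python's built-in subset test
print(multiples_of_4 <= evens, evens <= multiples_of_4)          # True False
```

The answer is a fixed property of the two sets; checking it again later can never give a different result.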

Thus, we can distinguish between:

- Static IF-THEN: in ideal situations, i.e. in mathematics and in classical mathematical logic.
- Dynamic IF-THEN: in real situations, i.e. in real computer programs and in the human brain.

Dynamic logic uses the dynamic IF-THEN

If we are looking for a logic that corresponds to human thinking, we must not limit ourselves to the ideal, i.e. static, IF-THEN. The dynamic IF-THEN is a better match for the normal thought process. The dynamic logic I am arguing for takes time into account and therefore needs the natural, i.e. real and dynamic, IF-THEN.

If time is a factor and the world may be a slightly different place after the first conclusion has been drawn, then it matters which conclusion is drawn first. Unless you allow two processes to run simultaneously, you cannot draw both conclusions at the same time. And even if you do, the two parallel processes can influence each other, complicating matters still further. For this and many other reasons, dynamic logic is much more complex than the static variant, which increases our need for a clear formalism to help us handle this complexity.
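
Why order matters can be illustrated with a toy rule system. The two rules below, `halve` and `subtract_three`, are hypothetical and operate on a single number `x`; each THEN produces a new state, and the next IF is checked against that changed state. Applying the same two rules in a different order leads to a different final state.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, int]
# A rule pairs a condition with an action: IF condition(state) THEN state := action(state)
Rule = Tuple[Callable[[State], bool], Callable[[State], State]]

def apply_in_order(state: State, rules: List[Rule]) -> State:
    """Fire each rule once, in the given order. Every IF is checked against
    the state left behind by the previous THEN."""
    for condition, action in rules:
        if condition(state):
            state = action(state)   # the world is now a slightly different place
    return state

# Two hypothetical rules over a single number x (actions return new dicts)
halve: Rule = (lambda s: s["x"] % 2 == 0, lambda s: {**s, "x": s["x"] // 2})
subtract_three: Rule = (lambda s: s["x"] > 3, lambda s: {**s, "x": s["x"] - 3})

start = {"x": 10}
print(apply_in_order(start, [halve, subtract_three]))  # {'x': 2}
print(apply_in_order(start, [subtract_three, halve]))  # {'x': 7}
```

Halving 10 first gives 5, which is still greater than 3, so the second rule fires as well. Subtracting 3 first gives 7, which is odd, so the halving rule never fires.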

Static and dynamic IF-THEN side by side

The two types of IF-THEN are not mutually exclusive; they complement each other and can coexist. The classic, static IF-THEN describes logical states that are self-contained, whereas the dynamic variant describes logical processes that lead from one logical state to another.
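
This division of labour can be sketched in Python as well. In this hypothetical example (a traffic light and a car, invented here for illustration), the static IF-THEN is a predicate that is simply true or false of one self-contained state, while the dynamic IF-THEN is a function that takes one state and produces the next. The list `trace` records the resulting sequence of states.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class World:
    light: str          # "red" or "green"
    car_moving: bool

def static_rule_holds(w: World) -> bool:
    """Static IF-THEN: a timeless constraint on a single state.
    IF the light is red THEN the car is not moving."""
    return (w.light != "red") or (not w.car_moving)

def dynamic_step(w: World) -> World:
    """Dynamic IF-THEN: a process leading from one state to the next.
    IF the light is green THEN, in the next state, the car is moving."""
    if w.light == "green":
        return replace(w, car_moving=True)
    return w

trace = [World(light="red", car_moving=False)]
trace.append(replace(trace[-1], light="green"))  # the environment changes
trace.append(dynamic_step(trace[-1]))             # the dynamic rule produces a new state
for w in trace:
    print(w, "| static rule holds:", static_rule_holds(w))
```

Each state can be checked against the static rule on its own, whereas the dynamic rule only makes sense as a step between two states.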

This interplay between statics and dynamics is comparable to the situation in physics, where mechanics has its statics and dynamics, and the study of electricity its electrostatics and electrodynamics. In these fields, too, the static part describes states (without time) and the dynamic part describes changes of state (with time).

This is a blog post about dynamic logic. The next post examines dynamic IF-THENs in more detail.

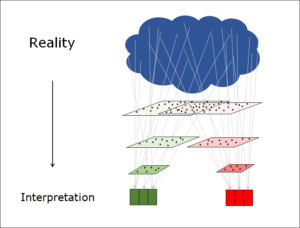

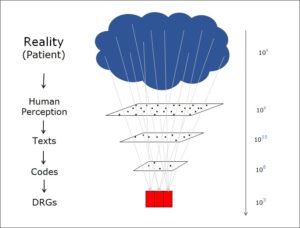

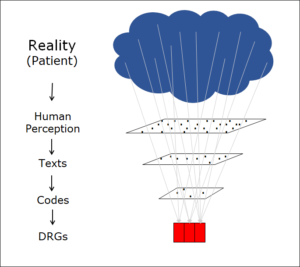

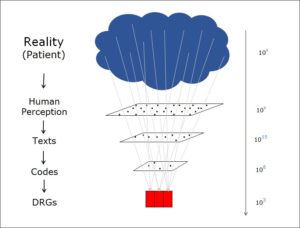

A glass of water contains a huge number of water molecules, all moving at different speeds and in different directions. They continuously collide with other water molecules, and their speed and direction of travel change with each impact. In other words, the glass of water is a typical example of a real object that contains more information than an external observer can possibly deal with.