How resonances explain our musical scales and chords

Have you ever wondered why the musical scales in all musical cultures, whether in the jungle, in the concert hall or in the football stadium, span precisely an octave? Or why children without any musical education the world over quite spontaneously find the major triad “beautiful”?

The explanation lies in the resonances. No matter how different musical cultures are, they still have a common core. This consists of the resonances which emerge between the notes of the musical scales and chords.

Mathematics and physics in music

Classical music theory is aware of the fact that there are mathematical fractions in the intervals, but it fails to explain how these fractions come into being and what they have to do with our perception of music.

The fractions represent the mathematics, but behind the mathematics, there are physical reasons. As is known, sound waves are able to reinforce or weaken each other. These physical compatibilities can be calculated if we compare the pitches of the notes involved. A simple rule explains how two notes start to resonate and what happens when three notes or more are involved. In this way, many peculiarities of the various musical scales and chords can be explained.

In this context, we ask the following questions:

- Why do all the musical scales worldwide span an octave?

- Why do perfect fifths and fourths also exist in virtually all musical scales?

- Why is the overtone series not a musical scale?

- What is the most resonant scale, and why does it occur in all cultures? (Spoiler: it is not the usual major scale, but almost.)

- How do three and more notes mix?

- What are the ten most resonant intervals within an octave?

- Why don’t the ten most resonant intervals within an octave constitute a meaningful musical scale?

- In contrast to this, what are meaningful musical scales?

- What keeps the notes of the major scale together, what keeps the notes of the minor scale together?

- Why is the major triad so consonant?

- What is a leading note in physical terms? What is the tritone?

- How did the division of the octave into 12 notes come about?

- How do complex chords such as upper structures work?

All these questions can be answered with the physics of sound waves.

Intervals, frequencies and fractions

How does the connection between mathematics and physics come about in harmony? To find out, we have a look at the most important characteristic of a note, namely its frequency, which determines its pitch. Next, we look at how an interval which is constituted by two notes can be defined as a mathematical fraction.

Sound waves and frequencies

In physical terms, a note is a sound wave. A sound wave is a vibration in the air or in an object (such as a string), whose most important characteristic is its frequency, i.e. the number of vibrations per second. Thus, the standard pitch a’ has a frequency of 440 Hz, so the standard pitch vibrates to and fro 440 times per second: on the string, in the air and in the inner ear.

Intervals and fractions

When two notes sound at the same time, they interrelate in an interval. Depending on how their frequencies interrelate, they mingle in a quieter or tenser manner. This is an effect that we perceive with our hearing. The intervals are calculated from the ratio of the frequencies to each other. In mathematical terms, this ratio is a fraction f1 / f2, i.e. the frequency of the higher note is divided by the frequency of the lower note.

Sample calculation for intervals

The calculation of intervals is very simple.

- If both notes are identical, then f1 = f2 and thus the fraction is f2 / f2 = 1.

- If frequency f1 is twice as high as f2, then f1 = 2 × f2 and the fraction is (2 × f2) / f2 = 2.

Example:

a”: f1 = 880 Hz

a’: f2 = 440 Hz

→ Interval a”/a’ = 880 / 440 = 2

- If frequency f1 is not a precise multiple of f2, then the interval does not result in an integer but in a true fraction:

Example:

e”: f1 = 660 Hz

a’: f2 = 440 Hz

→ Interval e”/a’ = 660 / 440 = 3/2
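The sample calculations above can be reproduced in a few lines of Python. This is only a minimal sketch: the function name `interval` is made up for illustration, and it assumes that the frequencies are given as whole numbers of Hz, with the higher note first.

```python
from fractions import Fraction


def interval(f_high: int, f_low: int) -> Fraction:
    """Return the interval f_high / f_low as a fully reduced fraction.

    Hypothetical helper for illustration; assumes integer frequencies in Hz.
    """
    return Fraction(f_high, f_low)


print(interval(440, 440))  # identical notes: 1
print(interval(880, 440))  # a'' over a': 2
print(interval(660, 440))  # e'' over a': 3/2
```

`Fraction` reduces the ratio automatically, which is why 660 / 440 appears as 3/2.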

This knowledge enables us to explain our musical scales and chords in simple and consistent terms. And if the above calculations have scared you off because, after all, you are a musician and not a mathematician or an accountant, I can reassure you: no more mathematics than that will be necessary. These are simple fractions with very small numbers, of the kind you learnt in primary school.

Three degrees of resonance

The resonances have a great deal to do with these intervals and the fractions that characterise them.

The three sample calculations above (identical notes, an integer multiple and a true fraction) already characterise the three basic physical degrees of resonance:

- 1st degree resonance: both frequencies are identical.

- 2nd degree resonance: the higher frequency is an integer multiple of the lower frequency.

- 3rd degree resonance: the higher frequency is a fractional (non-integer) multiple of the lower frequency.
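The three degrees listed above can be told apart mechanically from the reduced fraction. The following is a rough sketch under stated assumptions: `resonance_degree` is a hypothetical name, frequencies are whole numbers of Hz, and the higher note is passed first. It only classifies the form of the ratio and says nothing about how strong the resonance is.

```python
from fractions import Fraction


def resonance_degree(f_high: int, f_low: int) -> int:
    """Classify an interval as 1st, 2nd or 3rd degree (illustrative helper).

    Assumes integer frequencies in Hz with f_high >= f_low.
    """
    ratio = Fraction(f_high, f_low)  # reduced automatically
    if ratio == 1:
        return 1  # both frequencies are identical
    if ratio.denominator == 1:
        return 2  # integer multiple of the lower frequency
    return 3  # true fraction, e.g. 3/2


print(resonance_degree(440, 440))  # 1
print(resonance_degree(880, 440))  # 2
print(resonance_degree(660, 440))  # 3
```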

The distinction between the three degrees is essential

The distinction between three degrees as described above takes us further towards our goal of explaining the different musical scales:

- Resonances of the 1st degree are commonplace – everyone knows them – but they are also the strongest resonances. The mathematically calculable and physically explicable strength of the resonance will also accompany us in our further considerations concerning the other degrees.

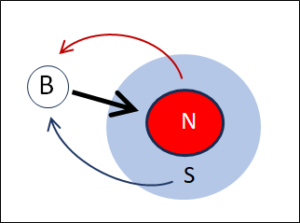

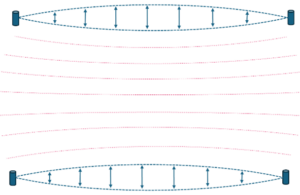

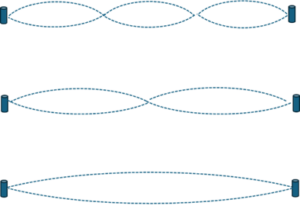

- Resonances of the 2nd degree: if the higher tone vibrates with an integer multiple of the lower tone, then this is an overtone. As a rule, admixtures of overtones f1 = n × f2 to a basic tone f2 occur naturally in all vibrating objects. It is also correspondingly simple to provide a physical reason for the calculation rule f1 = n × f2 for overtones.

Basic vibration (bottom), first and second overtone

Conventional harmony is based on these overtones, but there is a problem: the higher the overtone, the weaker the natural resonance. However, the higher overtones would be required to provide a physical reason for musical scales. In this instance, the conventional explanation of the musical scales fails. → The series of overtones is not a musical scale

- Resonances of the 3rd degree: it is rather less well known that ‘fractional’ intervals can be highly resonant and that in many decisive cases they produce much stronger resonances than overtones. The resonance of ‘fractional’ intervals can easily be explained in terms of physics. It can also be easily observed in practice how fractional intervals are perfectly resonant even though they are not overtones. → Experiment with the fifth (YouTube, in German)
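To make the difference between overtones and fractional intervals concrete, here is a small sketch. It assumes the rule f1 = n × f2 with n = 1 standing for the basic tone itself, and it reuses the example frequencies 440 Hz and 660 Hz from above. The name `overtone_series` is invented for illustration.

```python
from fractions import Fraction


def overtone_series(f_base: int, count: int) -> list[int]:
    """Return n * f_base for n = 1..count (n = 1 is the basic tone)."""
    return [n * f_base for n in range(1, count + 1)]


series = overtone_series(440, 4)
print(series)  # [440, 880, 1320, 1760]

fifth = 660  # e'' above a'
print(fifth in series)       # False: the fifth is not an overtone of a'
print(Fraction(fifth, 440))  # 3/2: a fractional interval
```

The fifth e” does not appear among the overtones of a’, yet its ratio to a’ is the simple fraction 3/2.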

It is this resonance of the 3rd degree that is responsible for our musical scales and chords. It explains which intervals are particularly resonant, how several tones mix and how the characteristics of the musical scales and chords that we can perceive are generated. More about this in the following posts.

This is a post in the category musical scales

Translation: Tony Häfliger, Vivien Blandford

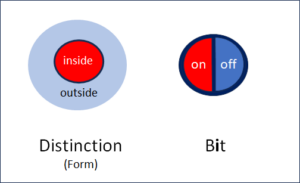

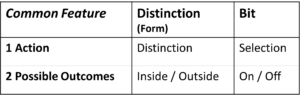

Fig. 3: Marked (m) and unmarked (u) space
