(This blog post continues the introduction to the dynamic IF-THEN.)

Several IF-THENs next to each other

Let’s have a look at the following situation:

IF A, THEN B

IF A, THEN C

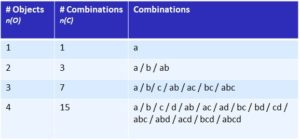

If a conclusion B and, at the same time, a conclusion C can be drawn from a premise A, then which conclusion is drawn first?

Static and dynamic logic

In classical logic, this does not matter: A, B and C all exist simultaneously in a static system, and their truth values do not change. It therefore makes no difference whether one or the other conclusion is drawn first.

This is completely different in dynamic logic – i.e. in a real situation. If I opt for B, I may “lose sight” of option C. After all, statement B is usually connected to further statements, and these may keep my processor so busy that it has no time left for statement C.
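A small sketch may make the difference concrete. Only the two rules IF A, THEN B and IF A, THEN C come from the text above. The rules leading to D, E and F, the step budget and the function names are assumptions made purely for illustration. The static closure collects everything that is derivable, regardless of order. The dynamic run draws one conclusion at a time and stops when its budget is used up, so the first choice decides what is reached at all.

```python
# Hypothetical rule set: each premise maps to the conclusions it allows.
RULES = {
    "A": ["B", "C"],
    "B": ["D", "F"],
    "C": ["E"],
}


def static_closure(start, rules):
    """Collect every derivable statement; the order of the rules is irrelevant."""
    known = {start}
    changed = True
    while changed:
        changed = False
        for premise, conclusions in rules.items():
            if premise in known:
                for conclusion in conclusions:
                    if conclusion not in known:
                        known.add(conclusion)
                        changed = True
    return known


def dynamic_run(start, rules, first_choice, budget):
    """Draw one conclusion at a time, depth first, for at most `budget` conclusions."""
    reached = [start]
    pending = []  # stack of conclusions that could still be drawn

    def push_options(statement):
        options = list(rules.get(statement, []))
        # Put the preferred option first; the sort is stable for the rest.
        options.sort(key=lambda option: option != first_choice)
        # Push in reverse so that the first option is popped first.
        pending.extend(reversed(options))

    push_options(start)
    while pending and budget > 0:
        statement = pending.pop()
        if statement in reached:
            continue
        reached.append(statement)
        budget -= 1
        push_options(statement)
    return reached


print(sorted(static_closure("A", RULES)))  # ['A', 'B', 'C', 'D', 'E', 'F']
print(dynamic_run("A", RULES, "B", 3))     # ['A', 'B', 'D', 'F']
print(dynamic_run("A", RULES, "C", 3))     # ['A', 'C', 'E', 'B']
```

With a budget of three conclusions, the run that starts with B never gets to C, while the run that starts with C never gets to D or F. The static closure is the same in both cases.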

Dealing with contradictions

There is an additional factor: further conclusions drawn from statements B and C lead to further statements D, E, F, etc. In a static system, all the statements resulting from further valid conclusions must be compatible with each other. This absolute certainty does not exist in the case of real statements. Therefore it cannot be ruled out that, say, statements D and E contradict each other. And in this situation, it does matter whether we reach D or E first.

A dynamic system must be able to deal with this situation. It must be able to actively hold the contradictory statements D and E and “weigh them up against each other”, i.e. analyse their relevance and plausibility while taking their individual contexts into consideration – if you like, this is the normal way of thinking.

In this process, it matters whether I “weigh up” B or C first. Depending on the option I choose, I will end up in a totally different “field” of statements. It is certain that statements from the two fields will occasionally contradict each other. For static logic, this would be tantamount to the collapse of the system. For dynamic logic, however, this is perfectly normal – indeed, a contradiction is precisely the reason to take a closer look at the system of statements at that point. It is the tension that drives the system and keeps the thought process active – until the contradictions are resolved.
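How a system might hold two contradictory statements and weigh them up can be sketched as follows. Everything here is an assumption for illustration: the class names, the numeric plausibility scores, the context labels and the rule that the more plausible statement wins are not part of logodynamics as described in this post. The point is only that a contradiction is recorded as an open tension instead of bringing the system down.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    name: str
    plausibility: float  # hypothetical score between 0 and 1
    context: str         # hypothetical note on how the statement was reached


@dataclass
class DynamicStore:
    conflicts: set  # frozensets of statement names that contradict each other
    held: dict = field(default_factory=dict)
    tensions: list = field(default_factory=list)

    def accept(self, statement):
        """Hold the statement even if it contradicts one that is already held."""
        for other in self.held.values():
            if frozenset({statement.name, other.name}) in self.conflicts:
                # Record the tension; the earlier statement is listed first.
                self.tensions.append((other.name, statement.name))
        self.held[statement.name] = statement

    def weigh(self):
        """Resolve open tensions by dropping the less plausible statement.

        On a tie the statement that was held first is kept, so the order
        in which the statements were reached can decide the outcome.
        """
        dropped = []
        while self.tensions:
            first, second = self.tensions.pop(0)
            if first not in self.held or second not in self.held:
                continue  # already resolved by an earlier decision
            a, b = self.held[first], self.held[second]
            loser = a if a.plausibility < b.plausibility else b
            del self.held[loser.name]
            dropped.append(loser.name)
        return dropped


store = DynamicStore(conflicts={frozenset({"D", "E"})})
store.accept(Statement("D", 0.6, "reached via B"))
store.accept(Statement("E", 0.8, "reached via C"))
print(store.tensions)      # [('D', 'E')]
print(store.weigh())       # ['D']
print(sorted(store.held))  # ['E']
```

Until `weigh` is called, both D and E are held at the same time, which is the state a static system cannot represent.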

Describing this dynamism of thinking is the objective of logodynamics.

Truth – a search process

The fact that the truth of the statements is not determined from the start may be regarded as a weakness of the dynamic system. Then again, this is exactly our own human situation: we do NOT know from the start what is true and what isn’t, and we first have to develop our system of statements. Static logic is unable to tell us how this development works – it is precisely for this that we need a dynamic logic.

Thinking and time

In real thinking, time plays a part. It matters which conclusion is drawn first. Admittedly, this makes things in dynamic logic slightly more difficult. However, if we want to track down the processes in a real situation, we have to accept that real processes always take place in time. We cannot remove time from thinking – nor can we remove it from our logic.

Yet static logic does so. This is why it is only capable of describing one result of thinking, the final point of a process in time. What happens during thinking is the subject of logodynamics.

Determinism – a cherished habit

If I can conclude both B and C from A and if, depending on which conclusion I draw first, the thought process evolves in a different direction, then I have to face another disagreeable fact: I am unable to derive from the initial situation (i.e. the set of statements A that I accept as true) which direction I will pursue. In other words: my thought process is not determined – at any rate not by the set of what I have already recognised.

On the one hand, this is regrettable, for I can never be quite sure whether I am drawing the right conclusions, since I simply have too many options. On the other hand, this also provides me with freedom. At the moment when I must decide whether to follow the path through B or through C first, without yet seeing through the system as a whole (which is my real situation), I also gain the freedom to make the decision myself.

Freedom – there is no certainty

Logodynamics thus explores the thought process of systems which still have to find truth. These systems, too, are not in a position to examine an unlimited number of conclusions at the same time. This is the real situation, and it means that these systems have a certain arbitrariness: they are capable of making decisions at their own discretion. Logodynamics explores the thought structures that emerge in the process, the advantages and disadvantages they have, and what may be taken for granted.

It is clear that derivability cannot be taken for granted. This is regrettable, and we would prefer to be on the safe side. Yet it is only this uncertainty that enables us to think freely.

The dynamic IF-THEN is necessary

In terms of practical thinking, the point is that static logic is not adequate to the process of finding truth. Static logic merely describes the consistent system once it has been found. Whether the system resolved its contradictions on the way there, and how it did so, only becomes apparent through a logodynamic description.

In other words: static logic is incomplete. To examine the real thought process, the somewhat trickier dynamic logic is indispensable. It deprives us of certainty but provides us with a more realistic tool.

This is a blog post about dynamic logic. The preceding post made a distinction between the dynamic and the static IF-THEN.